What to Know About OpenAI’s New Text-to-Video Generator, Sora

A machine-learning tool that turns text prompts into detailed videos has sparked both excitement and skepticism.

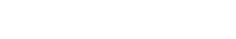

At a quick glance, the footage resembles a scene from a music video or a sleek car advertisement: a woman in sunglasses strolls confidently down a city street at night, surrounded by pedestrians and illuminated signs. Her dress and gold hoop earrings sway with each step. But this is not footage from a TV commercial or a music video. In fact, it doesn’t depict anything real. The woman and the street are entirely fictional and exist only on the screen.

OpenAI’s latest generative artificial intelligence (GAI) tool, Sora, can generate an entire video from a simple still image or a short written prompt. In about the time it takes to grab a burrito, Sora can produce up to a minute of remarkably realistic video. The tool follows OpenAI’s earlier successes, DALL-E and ChatGPT.

OpenAI unveiled Sora on February 15, 2024, but has not yet released it to the public. For now, access is restricted to a select group of artists and “red-team” hackers, who are testing the generator for both beneficial uses and potential misuse. OpenAI has, however, offered a glimpse of Sora’s capabilities by sharing sample videos in an announcement blog post, a brief technical report, and on the X (formerly Twitter) account of CEO and co-founder Sam Altman.

In both the length and the realism of its output, Sora represents the state of the art in AI-generated video. Jeong Joon Park, an assistant professor of electrical engineering and computer science at the University of Michigan, said he was surprised by the quality of Sora’s videos. Just seven months ago, Park said he believed that AI models capable of creating photorealistic video from text were still far off and would require significant technological advances. Now he acknowledges that video generators, and Sora in particular, have progressed faster than he expected, a view shared by others in the field.

Ruslan Salakhutdinov, a computer science professor at Carnegie Mellon University, said he was “a bit surprised” by the quality and capabilities of Sora. Salakhutdinov, who has developed other machine-learning-based video generation methods, calls Sora “certainly pretty impressive.”

Sora’s debut highlights how quickly certain areas of AI are advancing, propelled by billions of dollars in investment. But this rapid pace is also intensifying concerns about the technology’s effects on society. Tools like Sora threaten the livelihoods of millions of people in creative fields and could become powerful amplifiers of digital disinformation.

WHAT SORA CAN DO

Sora can generate videos up to 60 seconds long, and OpenAI says users can extend that duration by prompting the tool to produce additional clips in sequence. This is notable, because previous generative AI tools struggled to keep video frames consistent with one another, let alone across different prompts. Despite these capabilities, Sora does not represent a major breakthrough in machine-learning techniques. According to experts such as Jeong Joon Park and Ruslan Salakhutdinov, Sora’s algorithm is nearly identical to existing methods; the main difference is that it has been scaled up with more data and larger models. Described as a “brute-force approach,” it offers little that is new in terms of technique.

Simply put, Sora is a very large computer program trained to associate text captions with matching video content. Technically, it is a diffusion model, like many other AI image generators, combined with a transformer architecture similar to ChatGPT’s. Developers trained Sora by having it iteratively remove visual noise from video clips, so that it learned to generate video from text prompts. The key difference between Sora and image generators is that, instead of encoding text into static pixels, it translates words into temporal-spatial blocks that together make up a complete video clip. Other models, such as Google’s Lumiere, work in a similar way.
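OpenAI has not released Sora’s code, so the following is only a toy sketch, under stated assumptions, of the two ideas described above: cutting a video into temporal-spatial blocks that a transformer can treat as tokens, and the kind of noise a diffusion model is trained to remove. The function names `to_spacetime_patches` and `add_noise`, the patch sizes, and the grayscale nested-list video format are all hypothetical choices for illustration. Production systems are far more elaborate (for instance, they typically operate on compressed representations rather than raw pixels).

```python
import math
import random

# Hypothetical toy format: a grayscale video as video[t][y][x], one float per pixel.
Video = list[list[list[float]]]


def to_spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> list[list[float]]:
    """Cut a video into non-overlapping blocks spanning pt frames, ph rows and pw columns.

    Each block is flattened into one vector, which a transformer would treat as a
    single "token". Assumes the video dimensions divide evenly by the patch size.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, frames, pt):          # step through time
        for y0 in range(0, height, ph):      # step down the frame
            for x0 in range(0, width, pw):   # step across the frame
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches


def add_noise(patch: list[float], alpha_bar: float,
              rng: random.Random) -> tuple[list[float], list[float]]:
    """One forward-diffusion step: blend a clean patch with Gaussian noise.

    alpha_bar in (0, 1] sets how much of the original signal survives. During
    training, a denoising model learns to predict `noise` from `noisy` (plus the
    text prompt); generation runs this process in reverse, starting from noise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in patch]
    noisy = [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
        for x, n in zip(patch, noise)
    ]
    return noisy, noise


# Usage: 4 frames of 4x4 pixels cut into 2x2x2 blocks gives 2 * 2 * 2 = 8 patches.
video = [[[float(t * 16 + y * 4 + x) for x in range(4)] for y in range(4)] for t in range(4)]
patches = to_spacetime_patches(video, 2, 2, 2)
noisy, noise = add_noise(patches[0], alpha_bar=0.5, rng=random.Random(0))
print(len(patches), len(patches[0]))
```

Each patch mixes neighboring pixels across both space and time, which is what lets a model of this kind reason about motion rather than treating every frame as an unrelated still image.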

OpenAI has disclosed few details about how Sora was developed or trained, and the company declined to answer most of Scientific American’s questions. Nevertheless, experts including Park and Salakhutdinov believe that the model’s capabilities stem from vast amounts of training data and a very large number of parameters running on powerful computing resources. OpenAI has said it trained Sora on licensed and publicly available video, but some computer scientists speculate that synthetic data generated by video game engines, such as Unreal Engine, may also have been used. Salakhutdinov agrees, pointing to the exceptionally smooth look of the output and certain generated “camera” angles. Though deemed “remarkable,” Sora is not without flaws, including an artificial quality reminiscent of video games.

A closer look at the video of the walking woman reveals several discrepancies. The hem of her dress moves a little too stiffly for fabric, and the camera pans are oddly smooth. In a close-up, a splotchy pattern appears on the dress that was not there at first. Other inconsistencies include a necklace that vanishes in some shots, fasteners on the leather jacket’s lapels that shift position, and a jacket that changes length. Such flaws appear throughout the videos OpenAI has shared, even though many of these examples were likely cherry-picked to generate excitement. In some clips, entire people or pieces of furniture abruptly disappear or duplicate within a scene.

POSSIBILITIES AND PERILS

Hany Farid, a computer science professor at the University of California, Berkeley, is enthusiastic about Sora and similar text-to-video tools. He expects that if AI video follows the same trajectory as image generation, these flaws will become rarer and harder to detect. He foresees “really cool applications” that help creators bring their imagination to life more easily. The technology could also lower the barriers to entry for filmmaking and other typically expensive art forms.

Siwei Lyu, a computer science professor at the University at Buffalo, describes tools like Sora as something AI researchers have been dreaming of and calls their development a significant scientific achievement. But where computer scientists see potential, many artists are likely to see a threat of intellectual property theft.

Like the image generators that preceded it, Sora likely includes copyrighted material in its training data, which makes it prone to reproducing or closely imitating copyrighted works and presenting them as original generated content. Brian Merchant, a technology journalist and author, has identified at least one Sora clip, featuring a distinctive blue bird against a green, leafy background, that closely resembles a video likely included in its training data.